Why can most businesses afford to build a web app, but not a conversational one with the same functionality? This simple question motivated us to take on the challenge of democratizing conversational user experiences.

Before OpenCUI, we had worked in the field of conversational user experience for almost seven years, from voice assistants to chatbot development platforms. Along the way, we gained valuable insights into conversational interface development:

1. Voice-powered virtual assistants are very limited in helping people

In 2017, we built a voice assistant based on an intelligent skill search engine, offering a complete experience from understanding to execution, and launched it on LeTV, Lenovo, and Gome phones as their native system voice app. After launch, it performed robustly, with over 0.5 million daily queries and 2.5 million weekly active users.

We also provided a highly efficient skill extension model, in which users and developers could teach the assistant a skill by demonstrating it and then publish that skill on our skill platform, so that all users could use the published skill and further verify its usefulness.

In the beginning, we thought it was a lightweight model that was easy to operate, because:

1. Apps can connect to the assistant automatically, so developers don't need to know the APIs of every app.

2. Developers don't need to spend lots of time and energy negotiating integration with third-party apps.

3. Efficient and varied ways of generating skills help the product's skills grow exponentially.

But it backfired. There was a limit to what we could do and how much we could help. In China, apps change a great deal and very quickly, and different users often run different versions of the same app, so the same skill unintentionally splinters into many different versions. It is therefore impossible to cover everything at the interface level. At the API level, meanwhile, app developers do not expose much.

Because the user interface depends largely on business logic, and business logic can and will vary from business to business, we do not aim to build entire conversational apps for businesses. Instead, we aim to provide conversational interface building tools that empower business developers to build conversational experiences themselves. We therefore decided to open up the skill platform that we had used internally to extend mobile app skills, so that developers could use it to explore more possibilities.

2. Conversation-driven platforms do not work well for businesses

Because the skill platform had been used to extend app skills, its design was initially based on conversation. We quickly found out that business logic is typically described as processes: full control over each step a user takes is possible in a graphical user interface, but not in a conversational interaction.

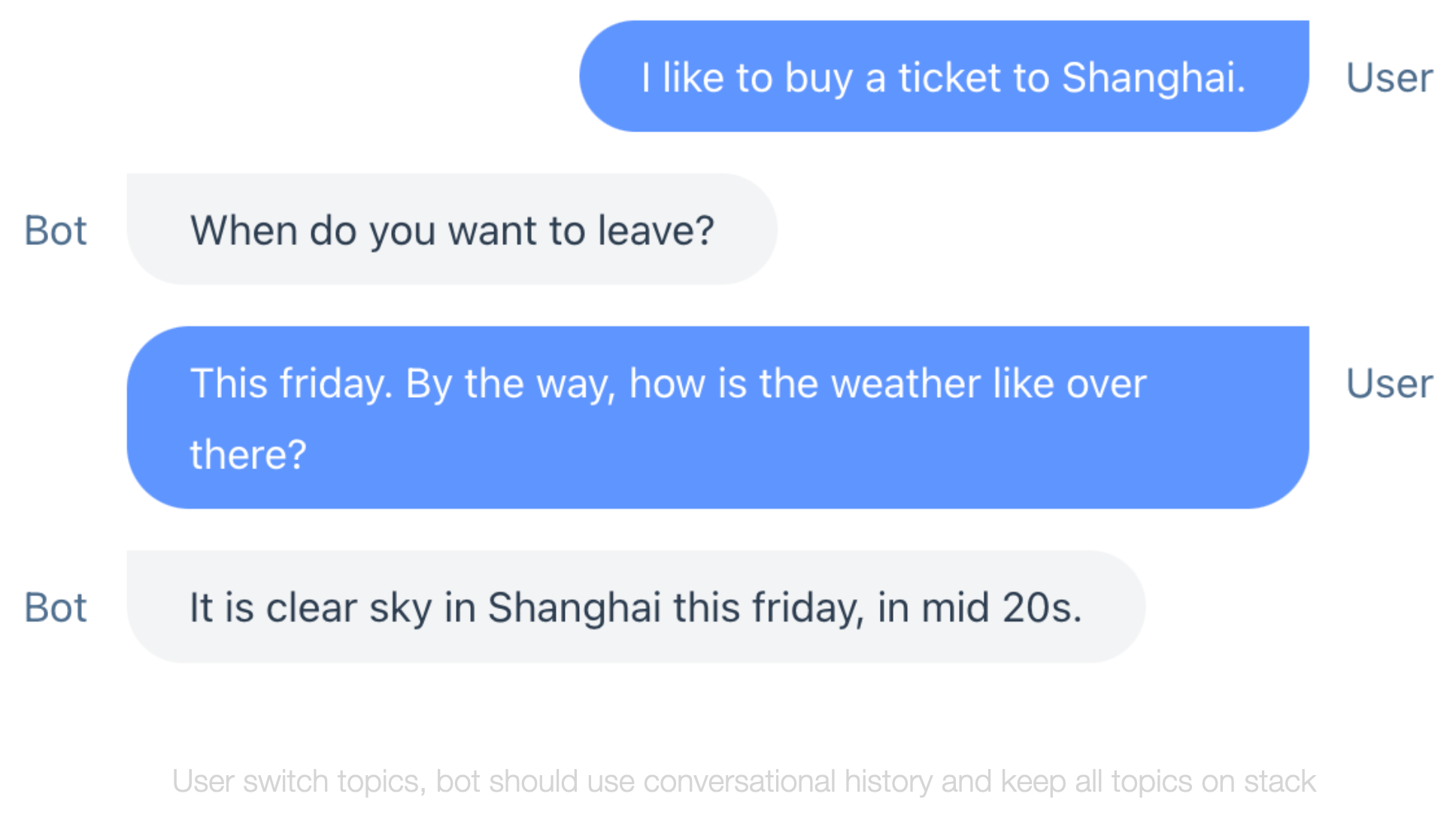

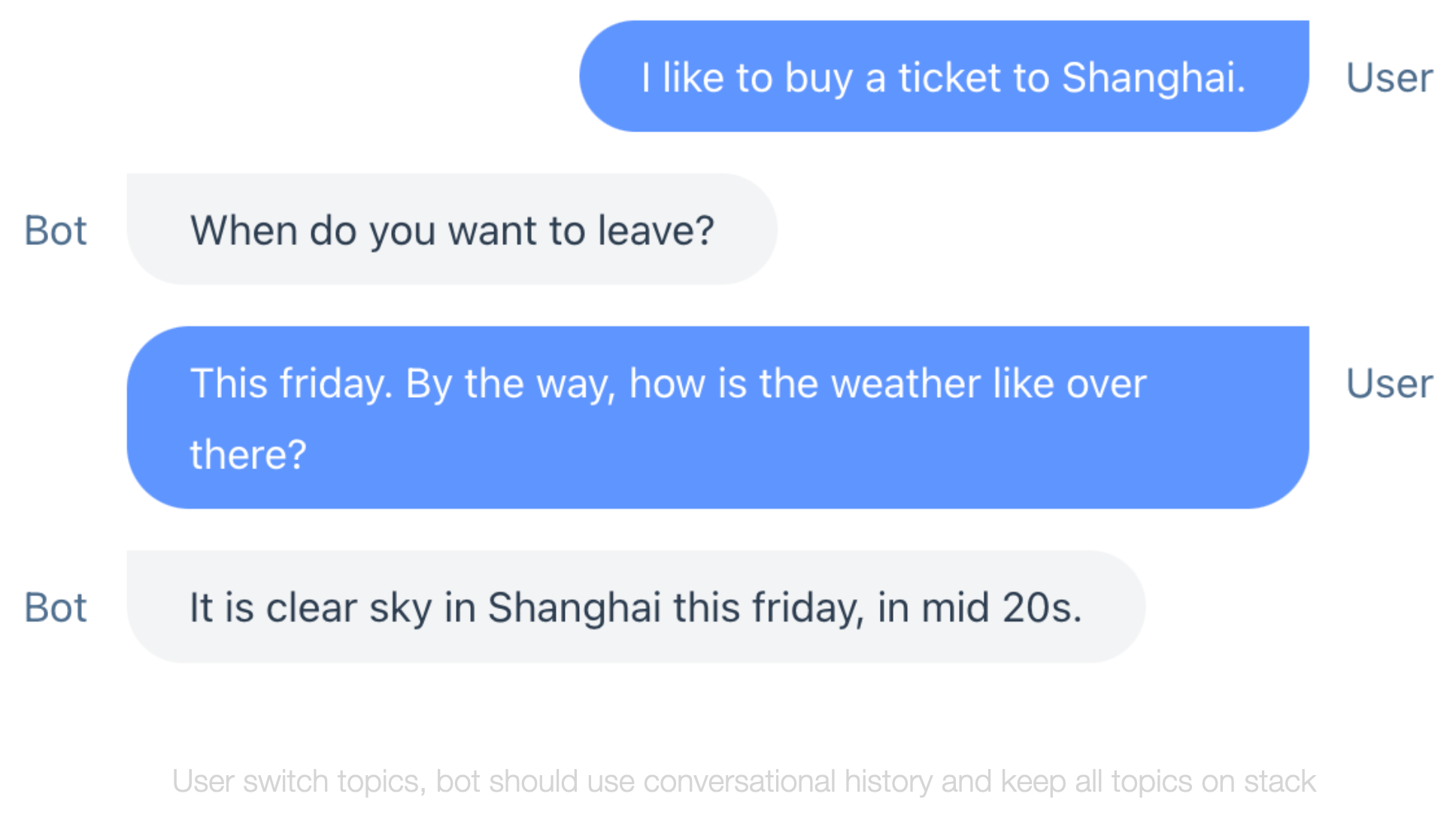

Users' expressions are random and diverse, and users will not follow an established route.

In a conversational user interface, users can say anything at any time. This is advantageous for users: there is no learning curve to acquire services, since they can directly state which service they want. What is more, during a conversation a user might switch topics without providing all the information needed. A chatbot should use the conversational history to automatically complete these user utterances and figure out what the user wants. Without some sort of factorization, the number of possible conversational paths that a flow-based modeling approach must cover grows exponentially with complexity. Defining conversational interaction with a flow-based approach thus becomes prohibitively costly.
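To make the growth concrete, here is a minimal sketch under a simplifying assumption of ours: the user supplies one piece of information per turn, in any order, and never switches topics. The slot names and both helper functions are hypothetical, chosen only for illustration. Even in this toy model, a flow has to spell out every ordering, while a factorized design describes each slot once.

```python
from itertools import permutations


def flow_paths(slots):
    """Every order in which a user might supply the slots, one per turn."""
    return list(permutations(slots))


def slot_definitions(slots):
    """A factorized design describes each slot once, independent of order."""
    return list(slots)


# Hypothetical slots for a table-reservation conversation.
slots = ["date", "time", "party_size", "name"]

print(len(flow_paths(slots)))        # paths a flow-based design must spell out
print(len(slot_definitions(slots)))  # definitions a factorized design needs
```

With four slots, that is 24 paths against 4 definitions. Topic switches and utterances that fill several slots at once, which this sketch ignores, would only add more paths.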

Expensive expertise in machine learning and natural language understanding is required.

Understanding human language is hard because different texts can mean the same thing, and the meaning of the same text can change with context. This is one of the key complications of building a conversational user interface. The popular approach relies on standard ML/NLU tasks such as text classification and named entity recognition. While these standard tasks are well studied, applying them to new business use cases requires serious customization, which typically calls for expensive expertise in ML/NLU. It is therefore not easy for a regular dev team to customize them for its own use cases. In fact, accuracy is not the most important metric when it comes to dialog understanding: to deploy a chatbot into production, everything needs to be hot-fixable by the operations team.

In conclusion, if a solution takes too long or costs too much, it is not commercially viable.

3. Conversational interface development should start from service

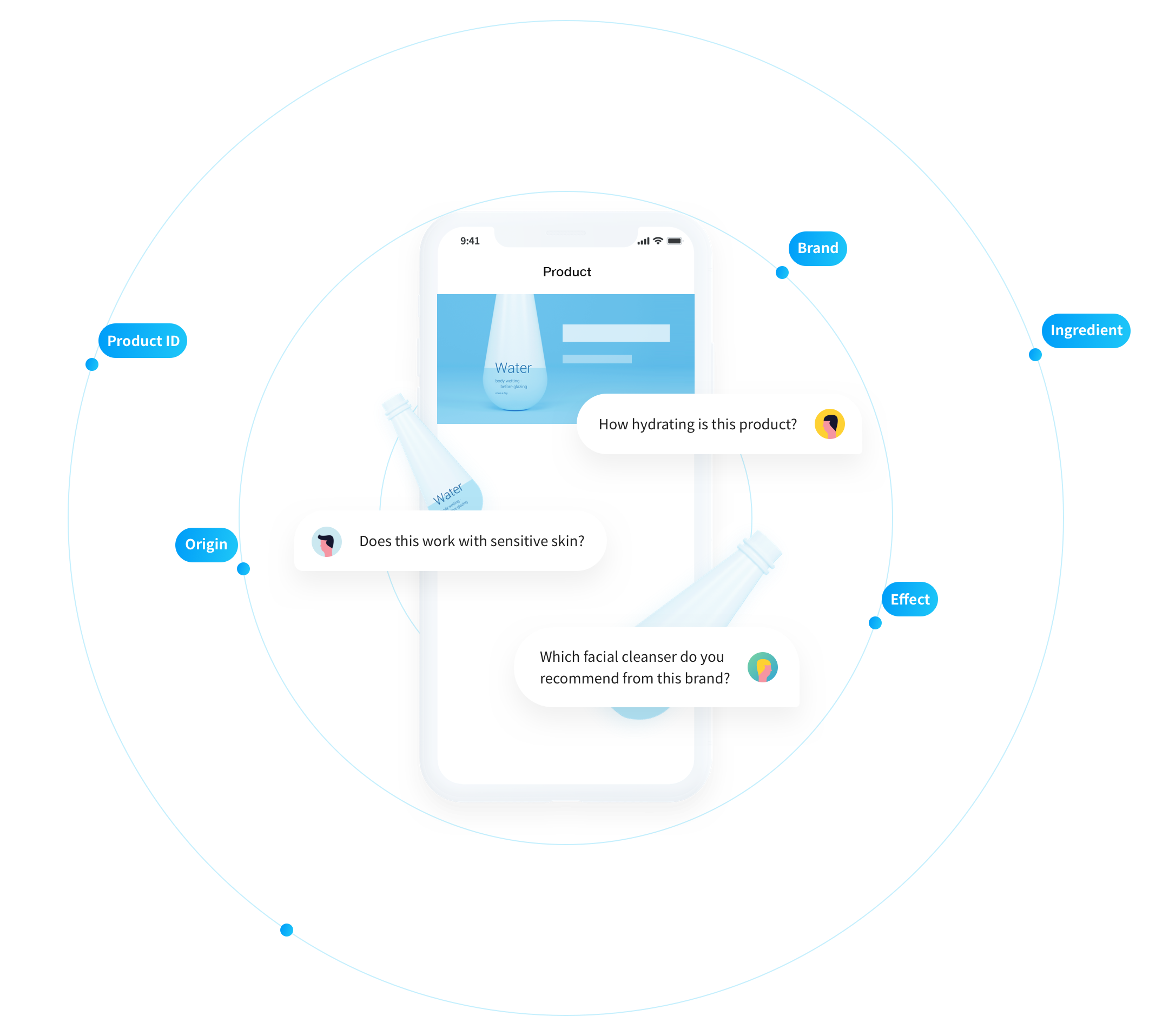

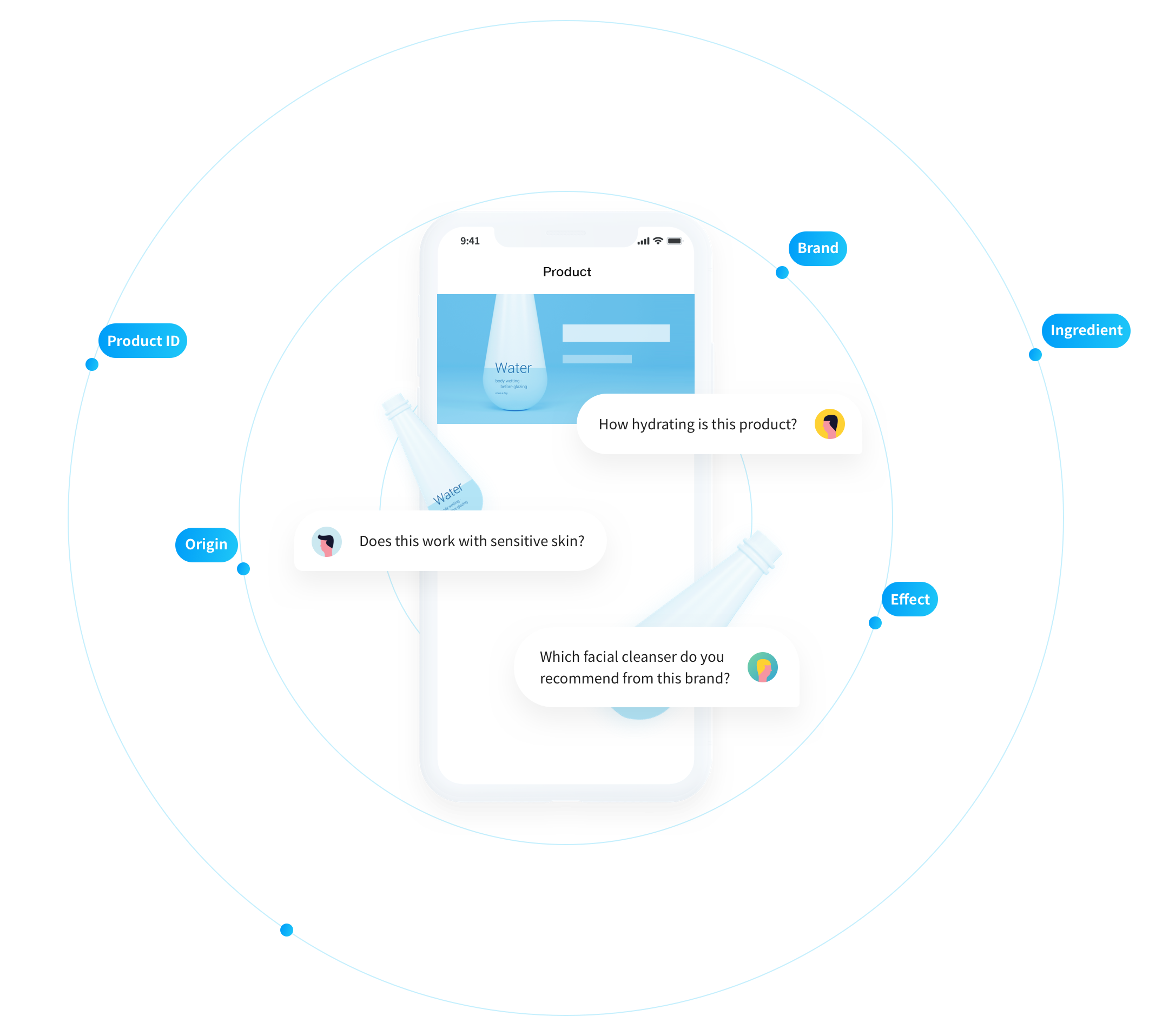

Services are the reason businesses build chatbots. Without first fully deciding which services are provided, it is impossible to design the user interface, as there is no focus. Instead of focusing on conversations, conversational interaction development should be driven by the service, where the actual business logic is implemented. Services are usually accessible via API calls and provided by the backend team.

An API schema provides a natural boundary for both design and implementation. Given the set of APIs, it should be immediately clear whether a given conversation is relevant or not.

An API schema is typically the result of careful deliberation between the product owner and the software architect, so it is usually normalized to be minimal and orthogonal. This means that similar functionalities are generally served by the same APIs, so there is no need to establish equivalence between user intentions at the language level; all we have to do is map language to the APIs.

A schema concisely describes services. Once the schema is decided, teams with different responsibilities can design and build upon it independently, without worrying about how other parts are handled.
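As a rough illustration of the schema acting as a boundary, the sketch below uses a hypothetical reservation service. The function names, parameters, and the lookup table standing in for language understanding are all assumptions made for this example, not OpenCUI's actual API. Different phrasings map to the same API function, and anything that maps outside the schema is out of scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiFunction:
    name: str
    params: tuple  # names of the parameters the function requires


# Hypothetical schema agreed on by the product owner and the architect.
SCHEMA = {
    "make_reservation": ApiFunction("make_reservation", ("date", "time", "party_size")),
    "cancel_reservation": ApiFunction("cancel_reservation", ("reservation_id",)),
}

# Stand-in for language understanding: utterance -> API function name.
# Two phrasings share one function, so no phrase-to-phrase equivalence is needed.
UTTERANCE_TO_FUNCTION = {
    "book a table": "make_reservation",
    "i need a reservation for tonight": "make_reservation",
    "cancel my booking": "cancel_reservation",
    "what's the weather": "get_weather",  # not part of the schema
}


def is_relevant(function_name):
    """A conversation is in scope only if it maps to a function in the schema."""
    return function_name in SCHEMA


def missing_params(function_name, filled):
    """Parameters the conversation still has to collect before calling the API."""
    return [p for p in SCHEMA[function_name].params if p not in filled]
```

For example, `is_relevant(UTTERANCE_TO_FUNCTION["what's the weather"])` is false, so that request can be declined without any dialog design, while `missing_params("make_reservation", {"date": "2024-05-01"})` tells the conversational side what it still needs to ask for.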